https://sputniknews.in/20231111/deepfakes-new-weapon-to-extort-blackmail--harass-women-5334319.html

Deepfakes: New Weapon to Extort, Blackmail & Harass Women

Sputnik India

2023-11-11T19:31+0530

Sputnik India

feedback.hindi@sputniknews.com

+74956456601

MIA „Rossiya Segodnya“

2023

Sangeeta Yadav

19:31 11.11.2023 (Updated: 20:22 11.11.2023)

Deepfake videos and photos of Indian actors have raised alarms about the misuse of artificial intelligence (AI) for spreading fake news, blackmail, extortion and harassment.

On Monday, 27-year-old actress Rashmika Mandanna was shocked to find herself trending on social media over a deepfake video in which her face had been morphed onto that of a British-Indian influencer, who was wearing a black outfit and could be seen entering a lift.

Another deepfake incident, involving a scene from the highly anticipated Bollywood movie "Tiger 3", upset actor Katrina Kaif, 40, on Tuesday.

The actor's picture, in which she can be seen engaged in a towel fight with a stunt woman, was maliciously edited using AI, resulting in an inappropriate portrayal.

Mandanna, who attained worldwide fame for playing Srivalli in the movie 'Pushpa', said that "we need to address this as a community and with urgency before more of us are affected by such identity theft."

Serious Threat to Women

Singer Chinmayi Sripaada voiced her support for Rashmika, emphasizing that deepfake technology poses a significant threat to women, enabling individuals to exploit and manipulate their images in order to harass, extort, blackmail and even sexually assault them.

Not just celebrities but ordinary people, too, are being targeted with deepfakes.

A number of incidents were reported last year in which women who were unable to repay money borrowed from loan companies or apps were harassed by collectors who manipulated their images into obscene photos.

"The consequences of deepfake are not limited to morphed explicit images targeting celebrities; it can go to the extent of causing distress in society by spreading fake news and may even attempt to manipulate public opinion," Venkatesh Murthy K, senior director at Data Security Council of India (DSCI), told Sputnik India.

Murthy opined that the combination of fraudulent loan apps and deepfake technology adds an extra layer of sophistication to these scams.

"Deepfake technology has been leveraged by malicious actors, including fraudulent loan app vendors who have already gained access to the personal information of the victim at the time of installation of the loan app on a smartphone," Murthy explained.

Mukesh Chaudhary, a cyber crime consultant and information security professional who is the founder and CEO of CyberOps Infosec, told Sputnik India that deepfakes are also used to modify pictures of politicians and to add objectionable comments.

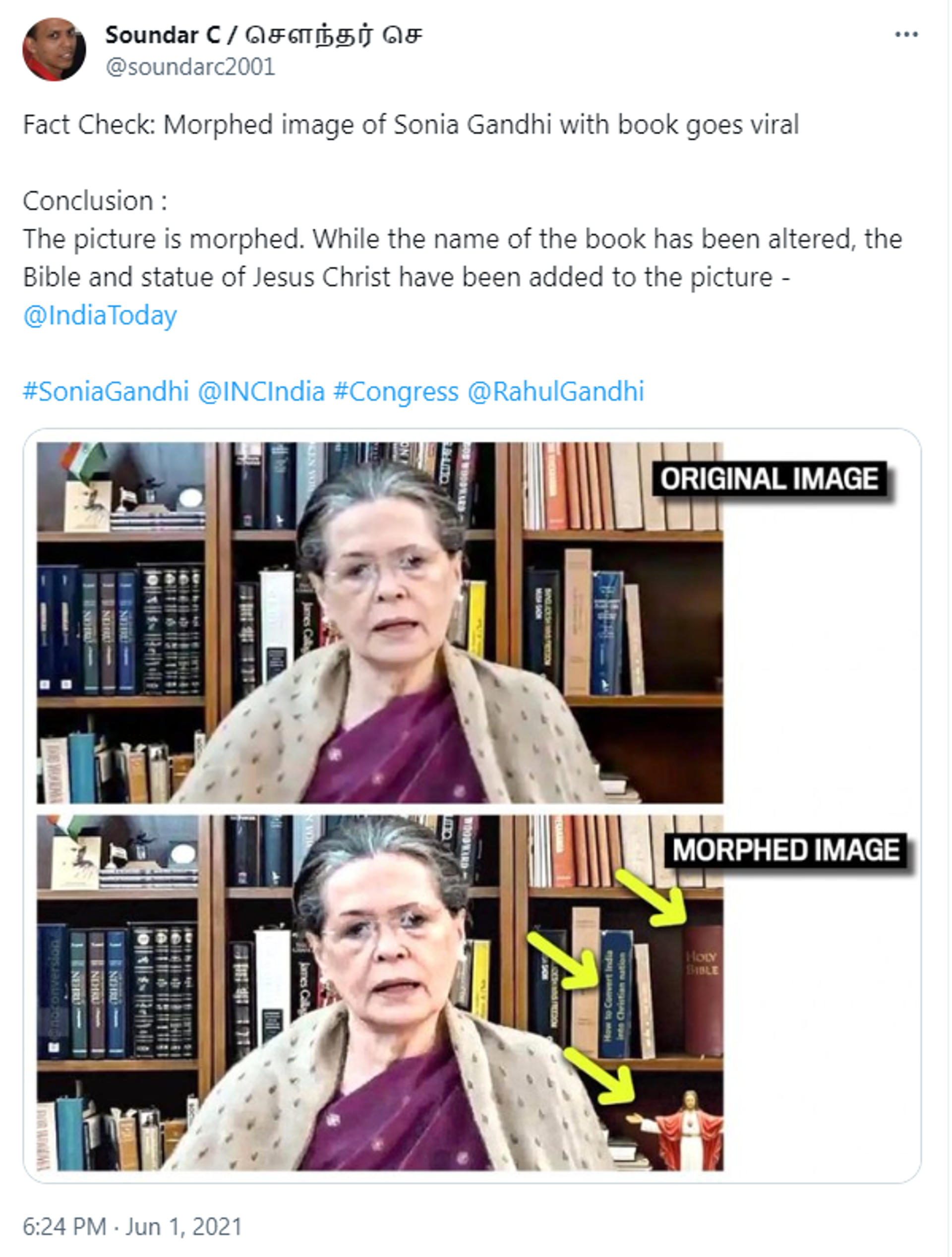

Chaudhary shared that he was handling a deepfake case in which former Congress President Sonia Gandhi was accused of wanting to convert India into a Christian nation.

“In a picture, Sonia Gandhi was shown sitting in front of the bookshelf which had the controversial book ‘How to Convert India into a Christian Nation’ along with the Bible and Jesus Christ’s statue. Further investigation revealed that it was a morphed image. The picture was smartly tampered with and modified and people started sharing it a lot. The real picture was later made public in which there was no Bible, Jesus Christ idol, and the book’s title was modified,” Chaudhary said.

Murthy said that, with deepfake cases on the rise, the media industry is expected to face unique challenges in the coming days in verifying the authenticity of the information being circulated.

Chaudhary opined that deepfakes have been around for the last 10 years and have been widely used in pornographic videos.

“If someone visits a pornography website, searches for any particular celebrity porn video or leaked video, but finds no content related to it, they would switch to another site. The website admin would get to know what people are searching. To attract good traffic and get a low bounce rate, they would morph the faces of celebrities and public figures onto a porn video. This deepfake has existed for the past many years,” Chaudhary said.

Solution

While several celebrities have called for legal action against the culprits, Union Minister Rajeev Chandrasekhar said that platforms should swiftly and decisively combat misinformation.

Singer Chinmayi Sripaada called for "a nationwide awareness campaign that can kickstart urgently to educate the general public about the dangers of deepfakes for girls and to report incidents instead of taking matters into their own hands."

Murthy told Sputnik India that curbing the misuse of deepfake technology is a complex task requiring legal frameworks, technological advancements, and awareness.

"It's not a one-time task and demands collaboration between governments, technology developers, and the general public. There is a need to invest significantly in research technologies that can detect and identify deepfake content, and more importantly, it should be easily accessible to people," Murthy said.